Smallcombe Carl

Technical User

Hi

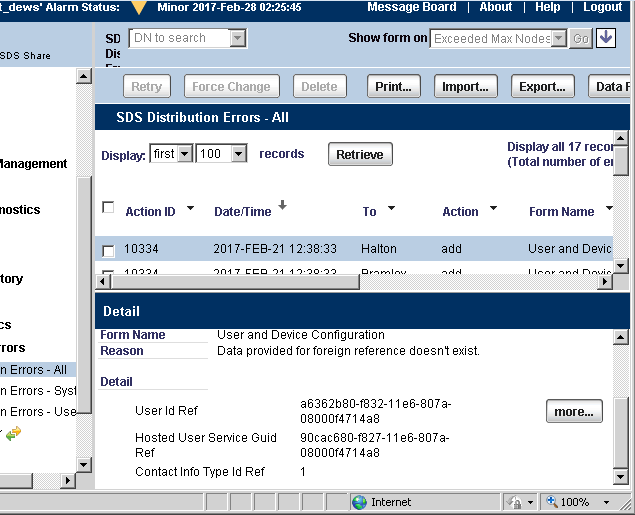

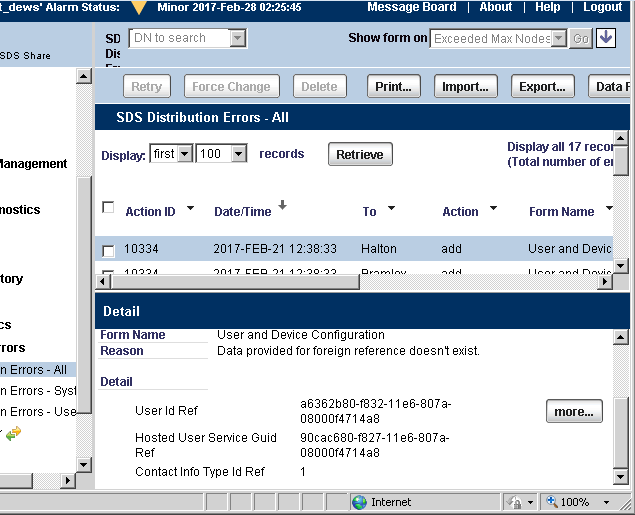

The customer has created SDS errors that I cannot resolve by programming. The cluster has 30-odd sites and 16 are affected. I tried debug, but the issue persisted when creating the number.

Any ideas, please?
