Hi,

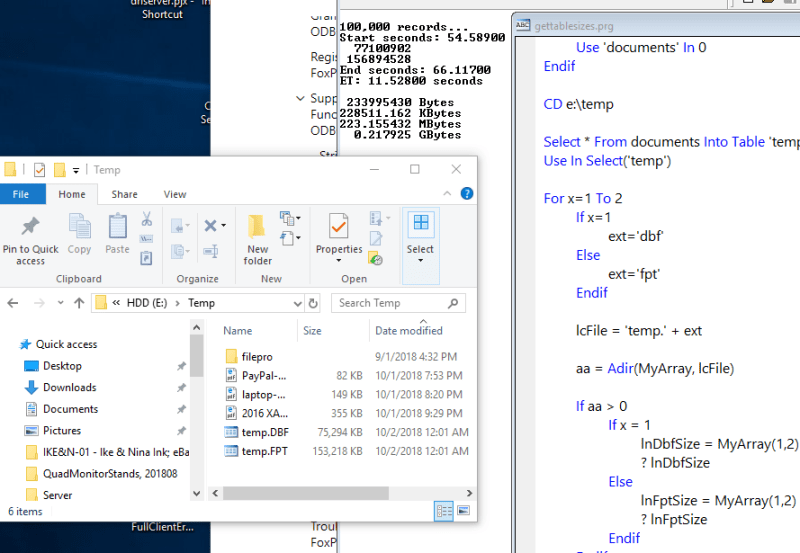

Any ideas on the best approach to collecting as much data on throughput as possible, and/or metering it, as a user uses the system? The system is a document management system where the data and images are stored remotely in SQL. Our new billing model will be based on a bucket system, where the user purchases a bucket of X size to be consumed within a defined period. Therefore, I need to journal outgoing query sizes and their returned result sizes, as well as the sizes of images that are actually returned.
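To make the bucket idea concrete, here is a minimal sketch of a per-user usage journal checked against a prepaid bucket. It is written in Python only because the post does not say what the app is built in. `UsageEntry`, `Bucket`, `record`, `used`, `remaining` and `exhausted` are hypothetical names, and the sketch assumes the caller already knows how many bytes went out (query text) and came back (result rows or image data) for each request.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class UsageEntry:
    # One journal line per request (hypothetical schema).
    user_id: str
    kind: str        # e.g. "query" or "image" (illustrative categories)
    bytes_out: int   # size of the outgoing query text
    bytes_in: int    # size of the returned result set or image
    at: datetime     # timezone-aware timestamp of the request


@dataclass
class Bucket:
    # A prepaid allowance of capacity_bytes, valid from `start` for `period`.
    capacity_bytes: int
    start: datetime
    period: timedelta
    journal: list = field(default_factory=list)

    def is_active(self, now: datetime) -> bool:
        # The bucket only accepts usage inside its billing period.
        return self.start <= now < self.start + self.period

    def used(self) -> int:
        # Both directions count against the bucket in this sketch (an assumption).
        return sum(e.bytes_out + e.bytes_in for e in self.journal)

    def remaining(self) -> int:
        return max(self.capacity_bytes - self.used(), 0)

    def exhausted(self) -> bool:
        return self.used() >= self.capacity_bytes

    def record(self, entry: UsageEntry) -> None:
        # Journal every request; reject usage outside the purchased period.
        if not self.is_active(entry.at):
            raise ValueError("usage falls outside the bucket's period")
        self.journal.append(entry)
```

Whether outgoing query bytes should count against the bucket, or only returned data, is a billing decision; the sketch counts both simply to show where that choice lives. In practice the journal would be persisted (for example to its own SQL table) rather than kept in memory.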

I'm also wondering if there is some sort of control/software that I can place in the app so that all data flowing in and out of the app passes through it, and that I can, of course, communicate with.
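One option for the "everything passes through it" component is a thin wrapper around the app's data-access layer rather than a network-level tool. The sketch below wraps a Python DB-API 2.0 connection and cursor, reporting bytes sent and received to a callback you supply. `MeteredConnection`, `MeteredCursor`, `payload_size` and `on_usage` are hypothetical names, and `sqlite3` is used only so the example runs without a server. It measures application-level payload (query text, parameters, column values), not on-the-wire bytes, so protocol overhead, compression and TLS are not included.

```python
import sqlite3
from typing import Any, Callable, Sequence


def payload_size(value: Any) -> int:
    # Approximate payload bytes for one value (an assumption, not wire size).
    if value is None:
        return 0
    if isinstance(value, (bytes, bytearray)):
        return len(value)  # BLOBs such as document images
    return len(str(value).encode("utf-8"))


class MeteredCursor:
    """Wraps a DB-API cursor and reports (bytes_out, bytes_in) per call."""

    def __init__(self, cursor, on_usage: Callable[[int, int], None]):
        self._cursor = cursor
        self._on_usage = on_usage

    def execute(self, sql: str, params: Sequence[Any] = ()):
        # Outgoing size: query text plus bound parameter values.
        sent = len(sql.encode("utf-8")) + sum(payload_size(p) for p in params)
        self._on_usage(sent, 0)
        self._cursor.execute(sql, params)
        return self

    def _meter_rows(self, rows):
        # Incoming size: every column value of every returned row.
        received = sum(payload_size(v) for row in rows for v in row)
        self._on_usage(0, received)
        return rows

    def fetchone(self):
        row = self._cursor.fetchone()
        if row is not None:
            self._meter_rows([row])
        return row

    def fetchall(self):
        return self._meter_rows(self._cursor.fetchall())

    def close(self):
        self._cursor.close()


class MeteredConnection:
    """Hands out metered cursors; all other calls pass straight through."""

    def __init__(self, conn, on_usage: Callable[[int, int], None]):
        self._conn = conn
        self._on_usage = on_usage

    def cursor(self) -> MeteredCursor:
        return MeteredCursor(self._conn.cursor(), self._on_usage)

    def commit(self):
        self._conn.commit()

    def close(self):
        self._conn.close()
```

The main trade-off: only traffic routed through the wrapper gets metered, so any code path that opens its own connection bypasses billing. On the other hand, it sees exactly what the app actually requested and returned, which matches the "images that are actually returned" requirement better than raw network counters would.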

This topic is more about discussing the pros and cons of how to do this, so please, if you see an issue, let's talk about it...

Thanks,

Stanley