[URL unfurl="true"]https://res.cloudinary.com/engineering-com/raw/upload/v1566692107/tips/Tek_Tips_20190824_Sumproduct_tnpwsl.docx[/url]

Have an MS Excel 2016 file with the following data.

[PRE]

Equip Code Sale Date Sale Price

12345 1/25/2019 8000

12346 1/26/2019 10000

89023 1/18/2019 24569

88456 2/25/2019 25000

88457 2/1/2019 13000

11567 1/12/2019 24000

11567 2/13/2019 53000

24680 1/10/2019 21000

24680 2/9/2019 14000

24699 1/5/2019 45257

12345 2/19/2019 61000

12346 1/5/2019 117500

89023 2/27/2019 25600

88456 2/20/2019 10222

88457 1/5/2019 30000

11567 1/3/2019 125000

[/PRE]

[PRE]

Sale Price

0 - < 25K 25K - < 50K 50K+

"Unique

Equip Code" 1/1/2019 2/1/2019 1/1/2019 2/1/2019 1/1/2019 2/1/2019

12345 =SUMPRODUCT(--($C$5:$C$20>0),--($C$5:$C$20<25000),--(YEAR($B$5:$B$20)=2019),--(MONTH($B$5:$B$20)=1))

12346 5

89023 5

88456 5

88457 5

11567 5

24680 5

24699 5

12345 5

[/PRE]

Using sumproduct formula to count the number of equipment by the month of the Sale Date and Sale Price for the respective equipment code.

Column A contains Equipment Code, Column B contains the Sale Date and Column C contain the Sale Price.

At Column E, I have a column that has a unique list of equipment codes. Inserting the sumproduct beginning at column F.

So far, the sumproduct formula below appears to correctly count the number of equipment that has a sale price > 0 and less than $25,000 with a sale date in 2019 and during the month of January.

However, I would like to include an additional filter on the equipment code.

Any insight as to how the sumproduct formula needs to be modified so that I am able to count the records by month, year, and sales price in increments of $25,000 for the respective equipment codes?

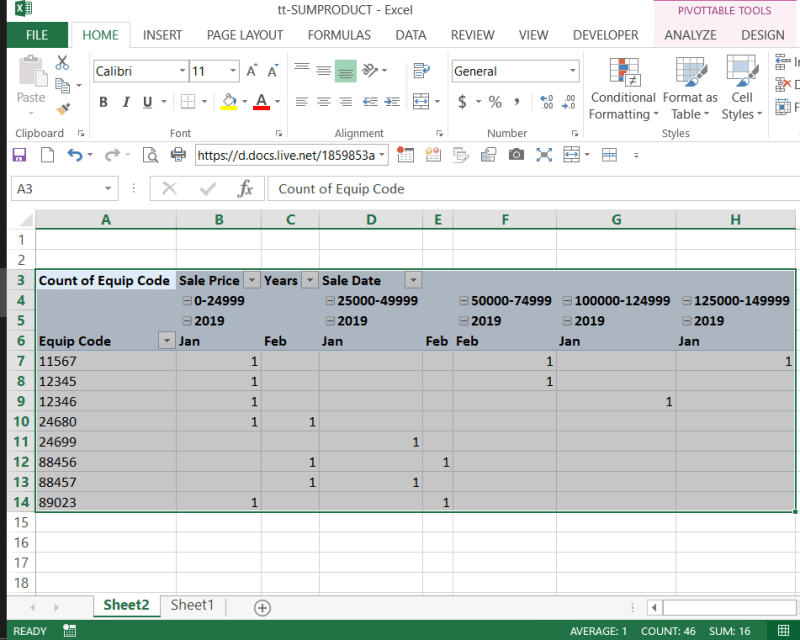

Note, I did initially attempted to perform via MS Excel pivot table but I was not able to group the data by month.

Also reviewed the use of the Aggregate function but really think that sumproduct is the preferred method to use.

Appreciate any additional insight as to if there is a more optimal method to accomplish the objective.

Have an MS Excel 2016 file with the following data.

[PRE]

Equip Code Sale Date Sale Price

12345 1/25/2019 8000

12346 1/26/2019 10000

89023 1/18/2019 24569

88456 2/25/2019 25000

88457 2/1/2019 13000

11567 1/12/2019 24000

11567 2/13/2019 53000

24680 1/10/2019 21000

24680 2/9/2019 14000

24699 1/5/2019 45257

12345 2/19/2019 61000

12346 1/5/2019 117500

89023 2/27/2019 25600

88456 2/20/2019 10222

88457 1/5/2019 30000

11567 1/3/2019 125000

[/PRE]

[PRE]

Sale Price

0 - < 25K 25K - < 50K 50K+

"Unique

Equip Code" 1/1/2019 2/1/2019 1/1/2019 2/1/2019 1/1/2019 2/1/2019

12345 =SUMPRODUCT(--($C$5:$C$20>0),--($C$5:$C$20<25000),--(YEAR($B$5:$B$20)=2019),--(MONTH($B$5:$B$20)=1))

12346 5

89023 5

88456 5

88457 5

11567 5

24680 5

24699 5

12345 5

[/PRE]

Using sumproduct formula to count the number of equipment by the month of the Sale Date and Sale Price for the respective equipment code.

Column A contains Equipment Code, Column B contains the Sale Date and Column C contain the Sale Price.

At Column E, I have a column that has a unique list of equipment codes. Inserting the sumproduct beginning at column F.

So far, the sumproduct formula below appears to correctly count the number of equipment that has a sale price > 0 and less than $25,000 with a sale date in 2019 and during the month of January.

Code:

Original version of sumproduct without restriction on Equipment Code

=SUMPRODUCT(--($C$5:$C$20>0),--($C$5:$C$20<25000),--(YEAR($B$5:$B$20)=2019),--(MONTH($B$5:$B$20)=1))However, I would like to include an additional filter on the equipment code.

Code:

Have tried a revised version of the sumproduct formula as displayed below, but receive zeroes.

=SUMPRODUCT(--($C$5:$C$20>0),--($C$5:$C$20<25000),--(YEAR($B$5:$B$20)=2019),--(MONTH($B$5:$B$20)=1), --($A$5:$A$20=$E5),)Any insight as to how the sumproduct formula needs to be modified so that I am able to count the records by month, year, and sales price in increments of $25,000 for the respective equipment codes?

Note, I did initially attempted to perform via MS Excel pivot table but I was not able to group the data by month.

Also reviewed the use of the Aggregate function but really think that sumproduct is the preferred method to use.

Appreciate any additional insight as to if there is a more optimal method to accomplish the objective.

![[glasses] [glasses] [glasses]](/data/assets/smilies/glasses.gif) Just traded in my OLD subtlety...

Just traded in my OLD subtlety...![[tongue] [tongue] [tongue]](/data/assets/smilies/tongue.gif)